SEOUL, September 01 (AJP) - The Korea Advanced Institute of Science & Technology (KAIST) has developed an artificial intelligence system capable of restoring sharp images from video footage that is blurred or distorted by fog, frosted glass, or other scattering effects.

The research was carried out by Professor Jang Moo-seok of KAIST's Department of Bio and Brain Engineering and Professor Ye Jong-chul of the Kim Jaechul Graduate School of AI. Their team created what they describe as the world's first "video diffusion-based restoration technology," which uses time-based information to reconstruct video frames that have been degraded during filming.

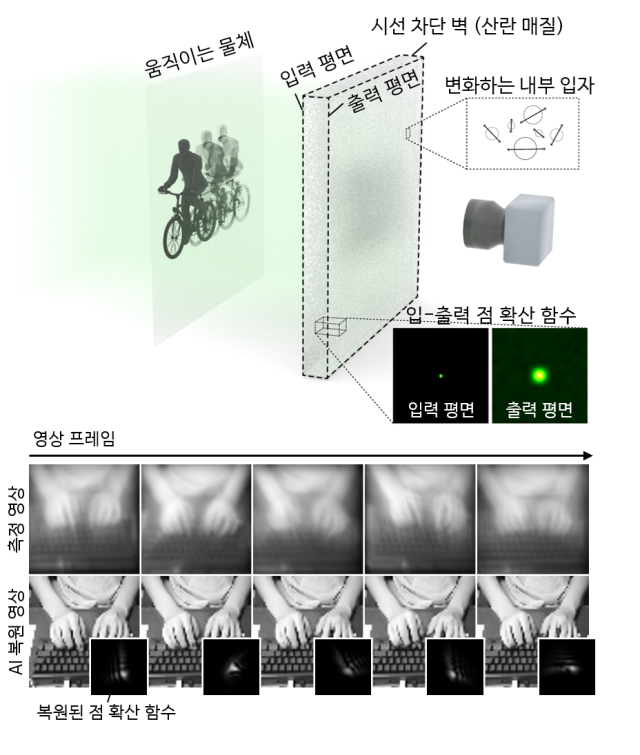

When light is scattered, such as in fog, smoke, or through frosted glass, camera sensors receive jumbled signals, producing blurred or unclear images. The new system learns how video frames change over time and uses that continuity to recover details hidden behind scattering media.

Scattering media are materials that disrupt the path of light and distort visual information. Common examples include fog, smoke, translucent glass, and even biological tissues like skin. The KAIST team's technology can effectively "see through" such barriers, similar to peering past frosted glass.

The potential applications are broad. The method could be used in medical imaging to examine blood vessels or skin tissue without invasive procedures. It could assist in search-and-rescue operations where smoke reduces visibility. It could also improve driver assistance systems on foggy roads, enable industrial inspections of plastics or glass, and provide clearer views underwater.

Traditional AI restoration methods often work only within the narrow range of data they were trained on. To overcome this, the KAIST team combined physics-based optical modeling with video diffusion models, allowing the AI to adapt to many kinds of image damage. Unlike conventional still-image approaches, their system accounts for how scattering environments change over time, such as the shifting view behind a curtain moved by wind.
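To make the idea of a time-varying scattering environment concrete, the following is a minimal sketch, not the KAIST team's optical model. It assumes a toy one-dimensional "video" and stands in for scattering with a box blur whose radius changes from frame to frame, plus sensor noise. The names `box_blur`, `degrade_video`, and `make_clean_video` are hypothetical and chosen only for illustration.

```python
import random


def box_blur(signal, radius):
    """Blur a 1-D signal with a box kernel; windows are truncated at the edges."""
    n = len(signal)
    out = []
    for i in range(n):
        lo = max(0, i - radius)
        hi = min(n, i + radius + 1)
        window = signal[lo:hi]
        out.append(sum(window) / len(window))
    return out


def degrade_video(frames, radii, noise_std=0.01, seed=0):
    """Toy forward model: each frame gets its own blur radius plus Gaussian noise."""
    rng = random.Random(seed)
    degraded = []
    for frame, radius in zip(frames, radii):
        blurred = box_blur(frame, radius)
        degraded.append([v + rng.gauss(0.0, noise_std) for v in blurred])
    return degraded


def mse(a, b):
    """Mean squared error between two equal-length signals."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def make_clean_video(num_frames=4, width=16):
    """A bright bar, four samples wide, that shifts one sample per frame."""
    return [
        [1.0 if 4 + t <= i < 8 + t else 0.0 for i in range(width)]
        for t in range(num_frames)
    ]


clean = make_clean_video()
radii = [1, 2, 3, 2]  # assumed blur strength per frame (the "moving curtain")
observed = degrade_video(clean, radii)
errors = [mse(c, o) for c, o in zip(clean, observed)]
print(errors)
```

In this toy setting, a restoration method would be handed only `observed` and asked to estimate `clean`. Because the blur differs in every frame, a method that treats each frame in isolation has less to work with than one that also exploits how the scene evolves.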

By training the model to learn temporal correlations between video frames, the researchers achieved stable restoration across different distances, thicknesses, and noise levels. In one demonstration, they were able to observe the motion of sperm cells behind a moving scattering layer, a first in the field.
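As a rough illustration of what "temporal correlation" means here, the sketch below measures the Pearson correlation between frames of a toy one-dimensional video in which a bright bar shifts by one sample per frame. This is an assumption-laden example, not the researchers' training procedure; `pearson` and `make_clean_video` are hypothetical helper names.

```python
import math


def pearson(a, b):
    """Pearson correlation coefficient between two equal-length signals."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def make_clean_video(num_frames=4, width=16):
    """A bright bar, four samples wide, that shifts one sample per frame."""
    return [
        [1.0 if 4 + t <= i < 8 + t else 0.0 for i in range(width)]
        for t in range(num_frames)
    ]


frames = make_clean_video()
adjacent = pearson(frames[0], frames[1])  # neighbouring frames
distant = pearson(frames[0], frames[3])   # frames three steps apart
print(adjacent, distant)
```

Neighbouring frames are more strongly correlated than frames further apart. That kind of continuity is the regularity a video model can learn and then use to constrain what a degraded frame plausibly looked like.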

The study also showed that the same framework could handle other restoration tasks without retraining, including fog removal, image quality enhancement, and blind deblurring of unfocused video. This suggests that the method could serve as a general-purpose platform for image and video restoration.

Researcher Kwon Tae-sung said, "We confirmed that a diffusion model trained on temporal correlations can effectively solve optical inverse problems, restoring data hidden behind moving scattering layers. In the future, we plan to extend this research to more optical challenges, including those that require tracking how light changes over time."

Doctoral students Kwon Tae-sung and Song Guk-ho of KAIST were co-first authors of the paper. The study was published on August 13 in IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), one of the world's leading journals in artificial intelligence.

The work was supported by South Korea's Ministry of Science and ICT, the National Research Foundation of Korea, Samsung's Future Technology Development Program, and the AI Star Fellowship program.

Copyright ⓒ Aju Press All rights reserved.