The Personal Information Protection Commission this week announced new measures to safeguard personal data in the AI era. These include giving minors the right to demand deletion of online posts made during adolescence and barring individuals with criminal records from sensitive roles such as CCTV monitoring. The initiative responds to widening gaps in victim protection as generative AI tools, including Google's Gemini, become increasingly accessible.

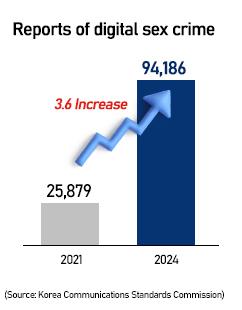

A presidential committee is working to establish a legal basis for victims to demand removal of AI-synthesized content. Deepfakes, digitally altered images or videos that make it appear a person said or did something they never did, are being weaponized in non-consensual sexual material and disinformation. Despite government crackdowns, takedown orders for deepfake sex crime videos continue to climb, according to data from the Korea Communications Standards Commission cited by People Power Party lawmaker Park Chung-kwon.

Public alarm spiked last year after explicit AI-generated images of women and girls flooded platforms such as Telegram, triggering rare police investigations. Yet enforcement remains weak: of 964 deepfake-related sex crime cases reported between January and October 2024, Seoul police made only 23 arrests.

Lawmakers are advancing parallel bills. On Sept. 11, Democratic Party legislator Huh Young introduced an amendment to sexual assault laws, warning that "the rapid advancement of AI technology has made it possible for anyone to create human images indistinguishable from reality, but this technology is being misused to produce fake sexual content that causes shame and disgust, emerging as a serious social problem."

His proposal would criminalize AI-generated sexual content regardless of whether it depicts a real individual, closing a major loophole. But AI developers warn the draft is overly broad and risks stifling legitimate innovation.

Experts say cheap, user-friendly AI services are fueling abuse. "As AI services get cheaper through market competition, more users are piling in—and among them, malicious users are growing," said Park Un-il, professor of AI utilization and data science at Sungkyunkwan University. "Deepfake pornography is becoming disturbingly common nowadays."

Recent incidents show how easy such abuse has become. On Thursday, Incheon police said they were investigating a high school student accused of using AI to superimpose sexual images onto photos of four classmates and sharing them online.

Advocates say such cases underscore the need for enforceable "forgetting rights," since traditional policing cannot keep pace with the speed and scale of AI content.

"It's not the technology that should be blamed; it's the people misusing it," Park Un-il added. "AI is still in its infancy, and most content lacks copyright protection because responsibility is hard to assign. Korea needs expert teams to craft precise regulations for generative AI."

Copyright © Aju Press. All rights reserved.