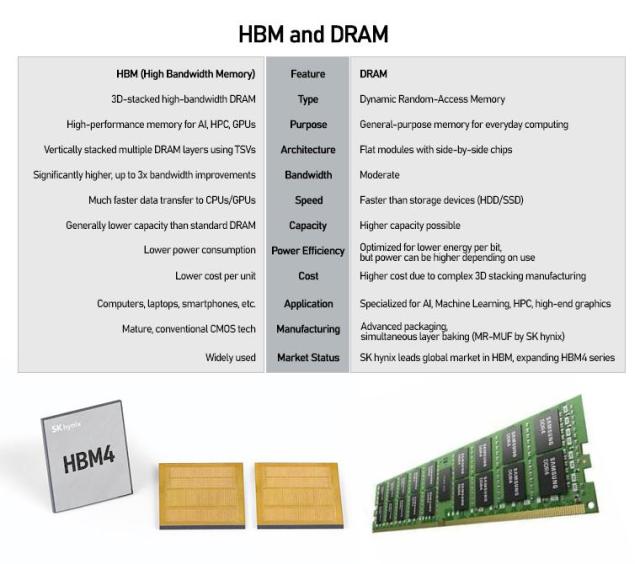

High Bandwidth Memory (HBM) upends that classical design. Instead of laying chips out side-by-side, HBM stacks multiple DRAM layers vertically and connects them with microscopic conduits called Through-Silicon Vias (TSVs). This 3D structure forms an ultra-dense memory tower that delivers dramatically higher bandwidth and capacity within the same or smaller footprint.

The difference is not cosmetic — it is foundational.

AI training and inference shuffle enormous datasets between processors and memory in real time. Conventional DRAM becomes a bottleneck: a single-lane road trying to handle multi-lane traffic. HBM, by contrast, functions like a multilayer expressway, giving GPUs the bandwidth needed to process massive models without choking the system.

Nvidia’s A100 GPU illustrates this shift. Equipped with HBM, it delivers nearly double the bandwidth and memory capacity of its GDDR-based counterpart, the A6000, while maintaining the same physical size. More memory per card means frontier AI models no longer need to be fragmented across multiple GPUs, reducing overhead and accelerating performance.

HBM is expensive — far pricier than DDR memory — but in the AI era, cost per gigabyte no longer determines value. What matters is speed, stability, and total usable capacity. For companies training frontier models, HBM is no longer optional but essential infrastructure.

This is also why SK hynix has surged to the front of the global memory race. Though traditionally quiet and engineering-driven, the company was first to mass-produce every major generation of HBM — from HBM2E to HBM3E — and consistently delivered memory that met Nvidia’s exacting standards for heat management, power efficiency, uniformity, and defect tolerance. Its lead in TSV processing and 3D stacking has translated into higher yields and greater reliability than rivals.

For Nvidia, which cannot risk memory-induced bottlenecks in its flagship AI accelerators, that reliability has proven decisive. SK hynix has become its primary supplier for the H100, H200, and next-generation B-series GPUs.

The combination of early technical leadership, rigorous quality control, and quiet operational execution has allowed SK hynix — long overshadowed by Samsung Electronics in traditional DRAM — to seize the decisive high ground in the AI memory era, powering the company’s record-breaking performance as the world enters a new AI super-cycle.

Copyright ⓒ Aju Press All rights reserved.