SEOUL, November 27 (AJP) - Expectations that Google’s in-house tensor processing units (TPUs) will challenge Nvidia’s near-monopoly in AI accelerators are translating into a bigger market for memory giants Samsung Electronics and SK hynix in Korea and Micron Technology in the United States.

Nvidia still commands an overwhelming share of the global AI accelerator market. But growth of application-specific integrated circuits (ASICs) and neural processing units (NPUs) — including Google’s TPU — is expected to far outpace GPUs in 2026.

TrendForce projects the ASIC/NPU segment to grow 44.6 percent next year versus 16.1 percent for GPUs, signaling a structural shift in AI infrastructure spending.

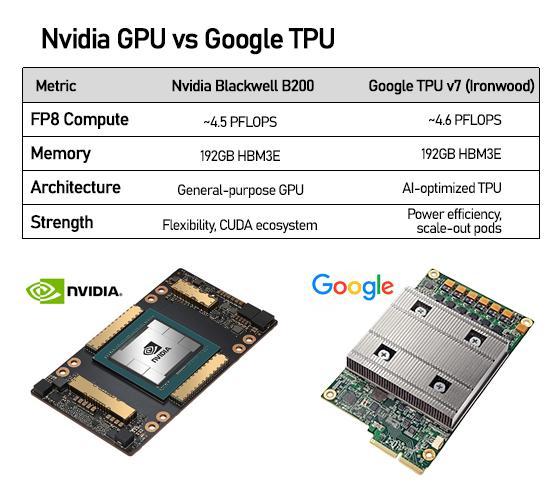

Nvidia’s strength remains anchored in its CUDA software ecosystem and rapid hardware-refresh cadence, which allow its GPUs to handle virtually all AI models with broad compatibility. But custom silicon is gaining appeal among hyperscalers seeking lower power consumption, tighter system-level integration and reduced long-term costs — priorities that grow more acute as AI data centers expand.

Google’s seventh-generation TPU, codenamed Ironwood, marks a significant inflection point.

The chip delivers FP8 performance nearly on par with Nvidia’s flagship Blackwell B200 GPU and matches its 192-gigabyte HBM3E memory capacity. Google also highlights the TPU’s ability to scale across pod-based clusters of more than 9,000 chips — a configuration suited for long-context inference and sustained workloads.

External adoption is strengthening the TPU’s credibility. Anthropic has committed to using up to one million TPUs under a multibillion-dollar cloud agreement, while Meta, OpenAI and Apple are reportedly testing or negotiating access to Google’s AI hardware. Such momentum has fueled concerns that Nvidia’s dominance, while intact for now, could gradually narrow as cloud providers diversify their compute stacks.

The stakes are rising alongside AI investment. Combined capital expenditure by major hyperscalers is forecast to reach $602 billion in 2026, up 36 percent from this year, with roughly three-quarters of that spending tied to AI-related infrastructure.
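For readers who want to check the arithmetic behind these figures, the short Python sketch below backs out what the forecast implies. It uses only the numbers quoted above; the function and variable names are illustrative, and "roughly three-quarters" is treated as exactly 0.75, which is an assumption.

```python
def implied_prior_year(current: float, growth_rate: float) -> float:
    """Back out the prior-year value from a current value and its year-over-year growth."""
    return current / (1.0 + growth_rate)


# Figures quoted in the article (billions of U.S. dollars).
CAPEX_2026_BN = 602.0
YOY_GROWTH = 0.36
# Assumption: "roughly three-quarters" is taken as exactly 0.75.
AI_SHARE = 0.75

capex_2025_bn = implied_prior_year(CAPEX_2026_BN, YOY_GROWTH)
ai_capex_2026_bn = CAPEX_2026_BN * AI_SHARE

print(f"Implied 2025 capex: ${capex_2025_bn:.1f} billion")      # $442.6 billion
print(f"AI-related 2026 capex: ${ai_capex_2026_bn:.1f} billion")  # $451.5 billion
```

Under these assumptions, the AI-related portion of 2026 spending alone would slightly exceed the implied total capital expenditure for this year.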

As custom silicon captures a larger share of these budgets, competition among chip architectures is intensifying.

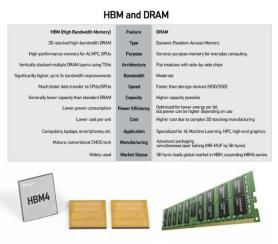

The most immediate ripple effect is in the memory market, where the AI boom largely benefits the memory oligopoly of SK hynix, Samsung Electronics and Micron Technology.

High-bandwidth memory (HBM) — critical for both GPUs and custom accelerators — is projected to grow 77 percent in 2026 and 68 percent in 2027, according to TrendForce.
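Compounded, the two projected growth rates imply that the HBM market would nearly triple over two years. The sketch below shows the calculation in Python; the function name is illustrative, and it assumes both percentages are year-over-year rates applied to the same measure, which the forecast as quoted does not specify.

```python
def cumulative_multiple(growth_rates: list[float]) -> float:
    """Compound a sequence of year-over-year growth rates into a single multiple."""
    multiple = 1.0
    for rate in growth_rates:
        multiple *= 1.0 + rate
    return multiple


# TrendForce projections quoted in the article: 77% in 2026, 68% in 2027.
hbm_multiple = cumulative_multiple([0.77, 0.68])

print(f"HBM market in 2027 vs. 2025: {hbm_multiple:.2f}x")  # 2.97x
```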

As of the second quarter of 2025, SK hynix led the HBM market with a 62 percent share, followed by Micron at 21 percent and Samsung at 17 percent.

Surging demand from U.S. hyperscalers has also tightened the race in the broader DRAM market, which includes HBM: Micron’s share climbed to 22 percent, while the Korean makers held shares in the 30-percent range.

Shares of Samsung Electronics jumped 9 percent this week on the Google effect, while SK hynix shares gained 4.4 percent.

Supply is already tight. Both SK hynix and Micron say most of their 2026 HBM output has been allocated, while next-generation products such as HBM4E are expected to dominate by 2027.

Nvidia’s own roadmap underscores the mutual dependence between chip designers and memory suppliers. SK hynix is the primary HBM partner for Nvidia’s upcoming Rubin GPU platform, while Samsung and Micron are racing to secure larger roles in future generations through yield gains and customized solutions.

Beyond market share shifts, geopolitics and energy constraints are reshaping the landscape. Stricter U.S. export controls on advanced AI chips, surging electricity demand at data centers, and government pushes to localize semiconductor supply chains are all nudging cloud companies toward more efficient, tailor-made hardware.

For South Korea, the changing balance presents both opportunity and risk.

The intensifying AI-chip race is driving unprecedented demand for HBM — benefiting all three major memory makers — but also exposing them to sharper policy swings, higher customer concentration and the strategic whims of hyperscalers increasingly committed to designing their own silicon.

As the era of one-size-fits-all AI hardware gives way to a more fragmented, power-conscious ecosystem, the rivalry between Nvidia’s GPUs and Google’s TPUs is set to shape not only the future of compute but the next chapter of global memory competition.

Copyright ⓒ Aju Press All rights reserved.