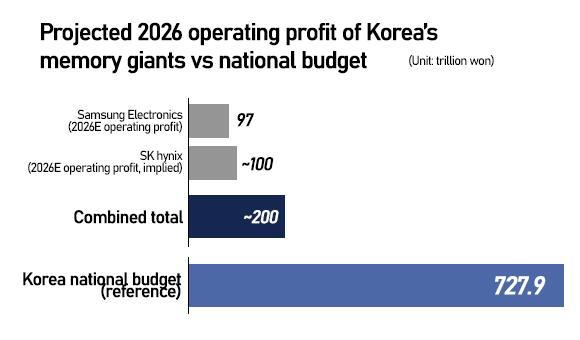

SEOUL, December 15 (AJP) - As Big Tech accelerates the next phase of AI infrastructure — with Google challenging Nvidia through in-house chip design and foundation models like Gemini scaling rapidly — the repercussions are becoming systemic for South Korea’s memory industry. Investment banks now project that Samsung Electronics and SK hynix could together generate close to 200 trillion won in operating profit in 2026, an income pool equivalent to roughly 27 percent of the national budget.

The driver is no longer just high-bandwidth memory (HBM), long viewed as the crown jewel of the AI era. What is reshaping earnings expectations is the spillover of the AI boom into mass-market DRAM, as memory makers prioritize scarce capacity for HBM and squeeze supply of so-called commodity products.

Domestic and global brokerages have upgraded earnings forecasts for both Samsung and SK hynix on signs of customer stockpiling driven by fears of a prolonged memory shortage. Prices of mainstream DDR products have surged to levels that, on a per-gigabyte basis, now approach those of advanced HBM stacks.

KB Securities analyst Kim Dong-won projects Samsung’s operating profit at 97 trillion won in 2026, up 129 percent from this year’s estimate. He expects Samsung’s HBM market share to “roughly double” to around 35 percent, as supply expands beyond Nvidia to a broader pool of hyperscalers and custom ASIC customers.
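
As a rough check on the forecast, the base figures it implies can be backed out from the numbers quoted above. The sketch below is illustrative only: the helper name `implied_base` is hypothetical, and the results are derived values, not figures published by KB Securities.

```python
def implied_base(forecast: float, pct_increase: float) -> float:
    """Back out the starting value implied by a forecast and a percent increase."""
    return forecast / (1 + pct_increase / 100)

# Figures quoted in the article: 97 trillion won in 2026, up 129 percent.
implied_2025_profit = implied_base(97.0, 129.0)

# "Roughly double to around 35 percent" implies a current share near half of that.
# This is an approximation, since the article says "roughly".
implied_current_share = 35.0 / 2

print(f"Implied 2025 operating profit: about {implied_2025_profit:.1f} trillion won")
print(f"Implied current HBM share: about {implied_current_share:.1f} percent")
```

On these assumptions, the forecast implies a 2025 operating profit estimate in the low 40 trillion won range.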

The call fits a broader market narrative: 2026 could mark an earnings apex as the “GPU-only” AI era evolves into a more fragmented ecosystem. Google’s TPUs, Amazon’s Trainium and Microsoft- and Meta-backed ASIC programs are widening HBM demand across customers — boosting both volume and product mix for Korea’s leading memory suppliers.

Yet an equally consequential shift is unfolding in pricing dynamics for conventional DRAM.

Commodity DRAM closes the gap

Industry data show that the price premium of HBM over commodity DRAM, once four- to fivefold, has narrowed sharply. HBM4, the sixth-generation standard expected to enter broader supply in the second half of next year, is priced in the mid-$500 range for a 36GB stack, implying roughly $15 per gigabyte.

By contrast, as of Dec. 12, spot prices for PC-grade DDR5 16-gigabit products (2GB per chip) stood at $26.20, or about $13 per gigabyte. Server-grade DDR5 RDIMM 64GB modules have climbed to around $780, translating to roughly $12 per gigabyte. Commodity DRAM has surged to within striking distance of HBM pricing.
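
The per-gigabyte comparison can be reproduced with a few lines of arithmetic. The sketch below uses the prices quoted above; the $550 figure is an assumed midpoint for the "mid-$500 range," and the function and variable names are illustrative.

```python
BITS_PER_BYTE = 8

def usd_per_gb(price_usd: float, capacity_gb: float) -> float:
    """Return the price per gigabyte for a part of the given capacity."""
    return price_usd / capacity_gb

quotes = {
    # Assumption: $550 stands in for "mid-$500 range" on a 36GB HBM4 stack.
    "HBM4 36GB stack": usd_per_gb(550.0, 36),
    # A 16-gigabit chip holds 16 / 8 = 2 gigabytes.
    "DDR5 16Gb chip (spot)": usd_per_gb(26.20, 16 / BITS_PER_BYTE),
    "DDR5 RDIMM 64GB module": usd_per_gb(780.0, 64),
}

for name, value in quotes.items():
    print(f"{name}: ${value:.2f}/GB")

# Ratio of HBM4 to PC-grade DDR5 on a per-gigabyte basis.
hbm_premium = quotes["HBM4 36GB stack"] / quotes["DDR5 16Gb chip (spot)"]
print(f"HBM4 premium over PC DDR5: {hbm_premium:.2f}x")
```

Under these inputs the HBM4 premium over PC-grade DDR5 comes out well below 2x, compared with the four- to fivefold gap cited for earlier periods.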

The implications for profitability may be even more consequential. HBM requires advanced foundry processes for base dies and highly complex packaging, both of which weigh on margins. UBS has projected that tightening DRAM supply could push DDR gross margins above HBM for the first time in early 2026, marking a rare inversion in the memory hierarchy.

This reflects a supply-driven distortion. As memory makers devote limited cleanroom capacity to HBM, shortages in conventional DRAM are emerging — pushing prices higher across PCs, servers and AI-adjacent applications.

A researcher at the Korea Development Institute (KDI) said the current cycle reflects a structural supply squeeze rather than a temporary demand surge.

“HBM demand will continue, but it does not replace DRAM,” the researcher said. “While DRAM demand remains solid, production lines are increasingly being shifted toward HBM, tightening supply.”

The dynamic, the researcher added, creates a favorable pricing environment in which both HBM and conventional DRAM benefit simultaneously — reinforcing expectations that memory earnings could reach a historical peak.

Strategy shifts at Samsung and SK hynix

The pricing shift is already reshaping production strategies. Samsung Electronics, while maintaining HBM3E supply for Nvidia’s Blackwell accelerators and Google’s seventh-generation TPUs, is simultaneously expanding investment in HBM4, GDDR7 and low-power DRAM (LPDDR5). The aim is to maximize exposure to what analysts increasingly describe as a memory super-cycle, spanning both premium and mainstream products.

SK hynix, long seen as all-in on Nvidia-bound HBM, is also recalibrating. While it continues to focus on HBM3E and prepares HBM4 shipments for next year, the company is sharply expanding production of next-generation commodity DRAM (1c DRAM) at its Icheon campus. Monthly capacity is set to rise to 140,000 wafers, an eightfold increase from current levels.

For investors, this matters because the memory cycle is once again being treated as a macro-level earnings engine, not a narrow semiconductor niche. Analysts increasingly assess Samsung’s recovery not as a simple “DRAM price beta,” but as a test of whether its HBM ramp-up, customer diversification and packaging roadmap can deliver a steeper profit curve — driven less by smartphones and PCs than by data-center capital spending and custom silicon programs.

The bullish forecasts remain execution-dependent. HBM is not just capacity-constrained but qualification-constrained, with share gains hinging on yield stability, packaging throughput and reliability at scale. Missteps in any of these areas could quickly reshape market shares.

If the cycle unfolds as brokers expect, 2026 would mark a rare two-engine peak for Korea’s chip sector: SK hynix monetizing its HBM lead, and Samsung converting its catch-up phase into market share and margin expansion — with both trajectories increasingly tied to the pace of global AI infrastructure buildouts rather than the traditional consumer-electronics memory cycle.

In the AI era, even “commodity” DRAM is no longer behaving like a commodity.
