LAS VEGAS — Born in the same Taiwanese city and distantly related by blood, Jensen Huang and Lisa Su took to podiums just hours apart on the same day—at different hotels along the Las Vegas Strip—and delivered rival visions for the future of artificial intelligence computing.

The parallel appearances ahead of CES 2026 turned Las Vegas into a split-stage arena, with Nvidia presenting at the Fontainebleau Hotel’s BleuLive Theater and AMD countering from the Venetian.

The message from both camps was unmistakable: the AI chip race has moved beyond individual processors into a full-scale contest over who defines the architecture of the modern data center.

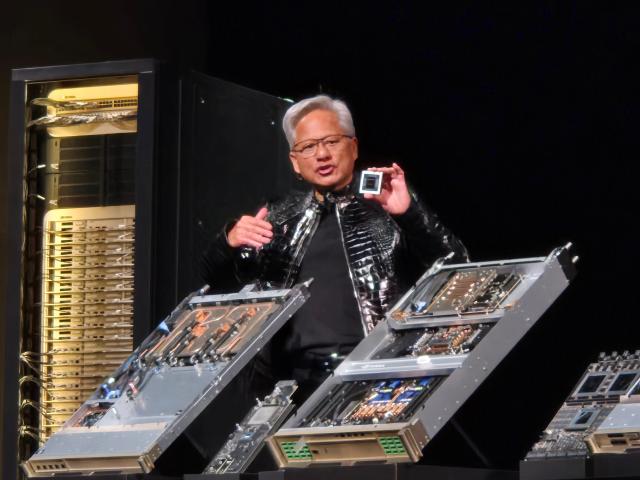

At Nvidia's press conference, Huang unveiled Vera Rubin, the company’s next-generation AI supercomputing platform, describing it as “one platform for every AI.” His argument was that AI computing has moved beyond software into the physical world—autonomous vehicles, robots, factories—where training, inference, and deployment must be engineered as a single, tightly coordinated system.

“The demand for AI computing is going through the roof,” Huang said. “Rubin arrives at exactly the right moment.”

Vera Rubin is a rack-scale system integrating GPUs, CPUs, networking, and data-processing components into a unified platform.

Nvidia said its flagship Rubin NVL72 configuration can cut inference token costs by up to tenfold compared with its Blackwell platform and reduce the number of GPUs required to train large mixture-of-experts models by a factor of four. Huang emphasized Nvidia’s annual cadence of new AI architectures and its philosophy of extreme codesign across chips, networking, and software, an approach aimed at maximizing system-level efficiency as AI models grow larger and more complex.

Rubin-based systems will be rolled out through partners beginning in the second half of 2026, targeting cloud providers, AI research labs, and enterprise customers running AI at massive scale.

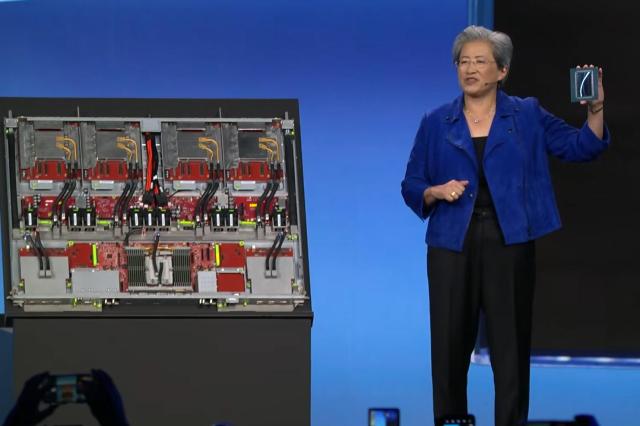

AMD counters with Helios and an open-system pitch

Across the Strip, AMD responded with Helios, its next-generation AI supercomputing platform, unveiled by Su at a conference at the Venetian. Her framing focused less on vertical integration and more on flexibility as AI expands beyond digital applications into factories, healthcare, robotics, and even space systems.

“Physical AI is one of the toughest challenges in technology,” Su said. “It requires systems that can understand their environment, make real-time decisions, and act with precision.”

Helios is built around AMD’s Instinct MI450 series GPUs and designed as a rack-level AI system emphasizing large memory capacity, high-bandwidth memory (HBM), and open interconnect technologies. AMD positioned Helios for large-scale AI training workloads where memory constraints often become a bottleneck. Su said AMD’s strategy prioritizes openness, allowing customers to expand and customize AI infrastructure without being locked into proprietary architectures.

AMD plans to deploy Helios later in 2026 through partnerships with data center operators and enterprise customers, underscoring its push to establish itself as a core platform provider in the AI ecosystem.

Copyright ⓒ Aju Press All rights reserved.
