The hiring push marks a significant escalation from earlier years, when recruitment of Korean chip talent was largely confined to memory maker Micron Technology and mobile chip designer Qualcomm.

Now, the world's most valuable tech companies are dangling Silicon Valley salaries and equity packages to lure specialists in a technology that has become the linchpin of the AI revolution.

Nvidia, the dominant force in AI accelerators and the largest buyer of HBM chips, is currently advertising positions for senior memory system engineers at its Santa Clara headquarters, offering a base salary of up to $356,500.

The role calls for at least 10 years of proven experience in DRAM design and a deep understanding of HBM. That profile effectively targets engineers at Samsung Electronics and SK hynix, the two companies that control the vast majority of the global HBM market.

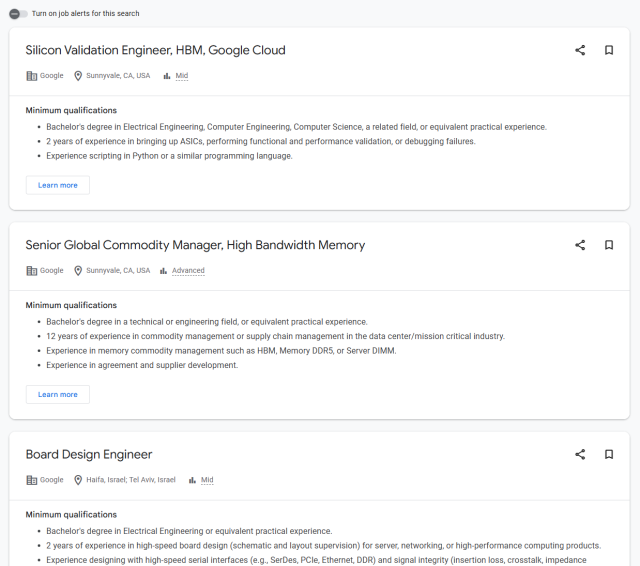

Google and Broadcom, which jointly develop Tensor Processing Units for Google's AI infrastructure, are also hiring HBM engineers in Silicon Valley. Google has posted openings for silicon validation engineers tasked with characterizing HBM operation in test chips and production silicon, while Broadcom seeks specialists in design-for-test verification across HBM, DDR and high-speed interface technologies.

Tesla has taken the most direct approach. Tesla Korea posted a job listing for AI Chip Design Engineers on Feb. 15, describing the role as part of a project to develop an AI chip architecture aimed at achieving the world's highest production volume. CEO Elon Musk amplified the recruitment drive on Tuesday, reposting the job opening on his X (formerly Twitter) account.

The talent war reflects a structural shift in the AI industry. As tech giants pour hundreds of billions of dollars into data center infrastructure, HBM has emerged as the critical bottleneck. The memory, which stacks multiple DRAM layers using through-silicon vias to deliver vastly higher bandwidth than conventional chips, is essential for training and running the large language models that underpin generative AI.

Bank of America estimates the global HBM market will reach about $34.6 billion in 2025 and grow to $54.6 billion in 2026, with demand from custom-ordered, ASIC-based AI chips expected to skyrocket by 82 percent and account for around one-third of the market.

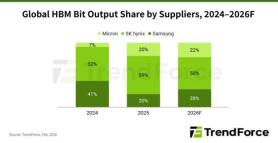

Currently, SK hynix holds a dominant market share of over 50 percent in HBM, with Samsung and Micron competing for the remainder.

The competitive landscape is poised to intensify further with the advent of custom HBM, or cHBM, in which big tech clients design proprietary logic dies tailored to their specific AI chip architectures. SK hynix showcased cHBM technology at CES 2026 in January, and Samsung has reportedly added new engineers to custom HBM projects targeting Google, Meta and Nvidia. Volume production of custom HBM is widely expected to begin in 2027.

Samsung's semiconductor division, meanwhile, awarded bonuses of up to 47 percent of annual salary for 2025, its highest payout since the AI-driven memory boom began.

Industry observers say the defensive measures may not be enough to stem the tide. The combination of Silicon Valley compensation — which for senior engineers can exceed $300,000 in base salary alone, before stock grants — and the prestige of working on cutting-edge AI systems presents a formidable draw.

Copyright ⓒ Aju Press All rights reserved.